- #Download spark with hadoop install#

- #Download spark with hadoop update#

- #Download spark with hadoop windows 10#

The Spark history server log directory is set to `hdfs://localhost:19000/spark-event-logs`. The template's example Java options line stays commented out: `# -XX:+PrintGCDetails -Dkey=value -Dnumbers="one two three"`

Run the following command to start the Spark history server: `$SPARK_HOME/sbin/start-history-server.sh`

Open the history server UI at its default address in a browser; you should be able to view all the submitted jobs.

In my configuration, I added the event log directory and the Spark history log directory. These settings are applied whenever a Spark job is submitted. The file keeps the template's Apache License 2.0 header and its opening comments: "Default system properties included when running spark-submit. This is useful for setting default environmental settings."
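As a rough sketch, the two additions described above might look like this in `spark-defaults.conf`. The property names are the standard Spark ones and are an assumption here, since the text only preserves the directory value `hdfs://localhost:19000/spark-event-logs`; using the same directory for both settings is likewise assumed.

```properties
# Assumed property names (standard Spark settings); the directory value comes from the text.
# Write an event log for every submitted application.
spark.eventLog.enabled           true
spark.eventLog.dir               hdfs://localhost:19000/spark-event-logs
# Directory the history server reads event logs from.
spark.history.fs.logDirectory    hdfs://localhost:19000/spark-event-logs
```

The history server only lists applications whose event logs land in the directory it reads, which is why both settings would point to the same location.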

Edit the .bashrc file: `vi ~/.bashrc`

Add the following to the end of the file: `export SPARK_HOME=~/hadoop/spark-2.4.3-bin-hadoop2.7`

Source the modified file to make it effective: `source ~/.bashrc`

Run the following command to start the Spark shell: `spark-shell`

The interface looks like the screenshot below.

Run the Spark Pi example via the following command: `run-example SparkPi 10`

On this website, I've provided many Spark examples.

If you've configured Hive in WSL, follow the steps below to enable Hive support in Spark. Copy the Hadoop core-site.xml and hdfs-site.xml files and the Hive hive-site.xml file into the Spark configuration folder:

- `cp $HADOOP_HOME/etc/hadoop/core-site.xml $SPARK_HOME/conf/`
- `cp $HADOOP_HOME/etc/hadoop/hdfs-site.xml $SPARK_HOME/conf/`
- `cp $HIVE_HOME/conf/hive-site.xml $SPARK_HOME/conf/`

You can then run Spark with Hive support (the enableHiveSupport function), starting from `from pyspark.sql import SparkSession`. For more details, please refer to this page: Read Data from Hive in Spark 1.x and 2.x.

Run the following command to create a Spark default config file from the template: `cp nf`

Update the config file with the default Spark configurations.

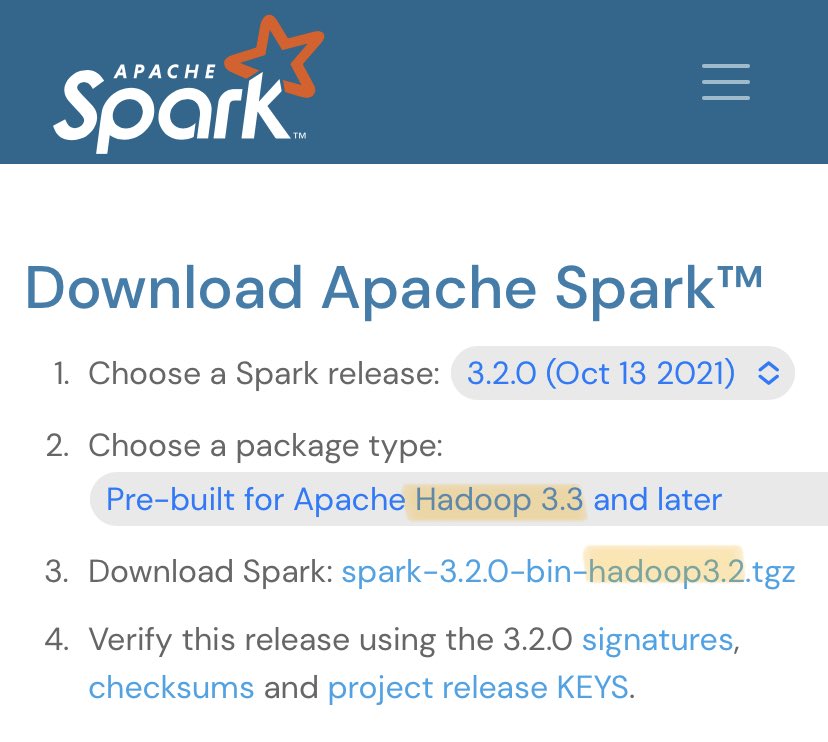

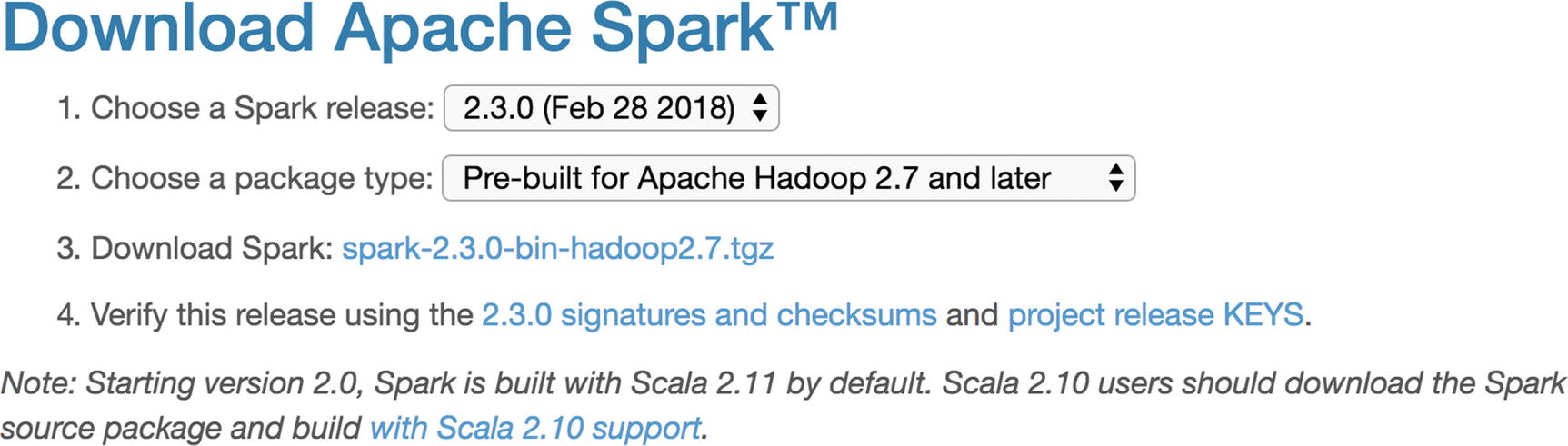
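To illustrate the `enableHiveSupport` step mentioned above, here is a minimal sketch of how a Hive-enabled session might be created. It assumes PySpark is importable and that the three XML files have already been copied into `$SPARK_HOME/conf`. The function names `create_hive_session` and `list_hive_databases` and the application name are hypothetical, not taken from the original page.

```python
def create_hive_session(app_name="hive-example"):
    """Return a SparkSession with Hive support enabled.

    `app_name` is a hypothetical default chosen for this sketch.
    """
    # Imported inside the function so this file can be loaded
    # even on a machine where PySpark is not installed.
    from pyspark.sql import SparkSession

    return (
        SparkSession.builder
        .appName(app_name)
        .enableHiveSupport()  # relies on hive-site.xml in the Spark conf folder
        .getOrCreate()
    )


def list_hive_databases(spark):
    """Return the database names visible through the Hive metastore."""
    # Index by position because the result column name differs between Spark versions.
    return [row[0] for row in spark.sql("SHOW DATABASES").collect()]
```

Calling `list_hive_databases(create_hive_session())` from a Python session with PySpark available should return the databases defined in your Hive metastore.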

Visit the Downloads page on the Spark website to find the download URL, then download the binary package by running `wget` with that URL.

Unzip the binary package using the following command: `tar -xvzf spark-2.4.3-bin-hadoop2.7.tgz -C ~/hadoop`

Set up the SPARK_HOME environment variable and also add the bin subfolder to the PATH variable.
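The unpack target and the `SPARK_HOME` value are tied together: the folder that `tar` creates under `~/hadoop` is the one `SPARK_HOME` has to point at. The small sketch below derives the two `.bashrc` lines from the archive name. The helper name `spark_env_lines` is hypothetical, and the `PATH` line is an assumed, conventional way of adding the `bin` subfolder rather than the author's exact line.

```python
def spark_env_lines(archive_name, target_dir):
    """Build the export lines for .bashrc from the archive name and unpack folder."""
    suffix = ".tgz"
    if not archive_name.endswith(suffix):
        raise ValueError(f"expected a {suffix} archive, got {archive_name!r}")
    # Spark's binary archives unpack into a folder named after the archive,
    # minus the extension.
    folder = archive_name[: -len(suffix)]
    spark_home = f"{target_dir.rstrip('/')}/{folder}"
    return [
        f"export SPARK_HOME={spark_home}",
        # Assumed form of the PATH update; the exact line is not shown in the text.
        "export PATH=$SPARK_HOME/bin:$PATH",
    ]


for line in spark_env_lines("spark-2.4.3-bin-hadoop2.7.tgz", "~/hadoop"):
    print(line)
```

If you unpack a different Spark build or choose another target folder, the same two arguments change and the `SPARK_HOME` line follows from them.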

I also recommend installing Hadoop 3.2.0 on your WSL by following the second page listed in the prerequisites. After that installation, your WSL should already have OpenJDK 1.8 installed.

Now let's start to install Apache Spark 2.4.3 in WSL.

This page summarizes the steps to install the latest version, 2.4.3, of Apache Spark on Windows 10 via Windows Subsystem for Linux (WSL).

Prerequisites

Follow either of the following pages to install WSL in a system or non-system drive on your Windows 10.

- Install Windows Subsystem for Linux on a Non-System Drive
- Install Hadoop 3.2.0 on Windows 10 using Windows Subsystem for Linux (WSL)
